The idea of smart roads is not new. It includes efforts like traffic lights that automatically adjust their timing based on sensor data and streetlights that automatically adjust their brightness to reduce energy consumption. PerceptIn, of which coauthor Liu is founder and CEO, has demonstrated at its own test track, in Beijing, that streetlight control can make traffic 40 percent more efficient. (Liu and coauthor Gaudiot, Liu's former doctoral advisor at the University of California, Irvine, often collaborate on autonomous driving projects.)

But these are piecemeal changes. We propose a much more ambitious approach that combines intelligent roads and intelligent vehicles into an integrated, fully intelligent transportation system. The sheer amount and accuracy of the combined information will allow such a system to reach unparalleled levels of safety and efficiency.

Human drivers have a crash rate of 4.2 accidents per million miles; autonomous cars must do much better to gain acceptance. However, there are corner cases, such as blind spots, that afflict both human drivers and autonomous cars, and there is currently no way to handle them without the help of an intelligent infrastructure.

Putting a lot of the intelligence into the infrastructure will also lower the cost of autonomous vehicles. A fully self-driving vehicle is still quite expensive to build. But gradually, as the infrastructure becomes more powerful, it will be possible to transfer more of the computational workload from the vehicles to the roads. Eventually, autonomous vehicles will need to be equipped with only basic perception and control capabilities. We estimate that this transfer will reduce the cost of autonomous vehicles by more than half.

Here's how it could work: It's Beijing on a Sunday morning, and sandstorms have turned the sun blue and the sky yellow. You're driving through the city, but neither you nor any other driver on the road has a clear view. But each car, as it moves along, discerns a piece of the puzzle. That information, combined with data from sensors embedded in or near the road and from relays from weather services, feeds into a distributed computing system that uses artificial intelligence to construct a single model of the environment that can recognize static objects along the road as well as objects that are moving along each car's projected path.

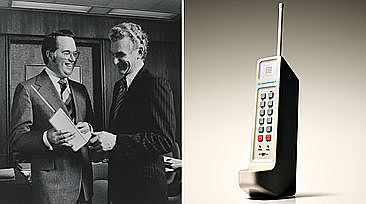

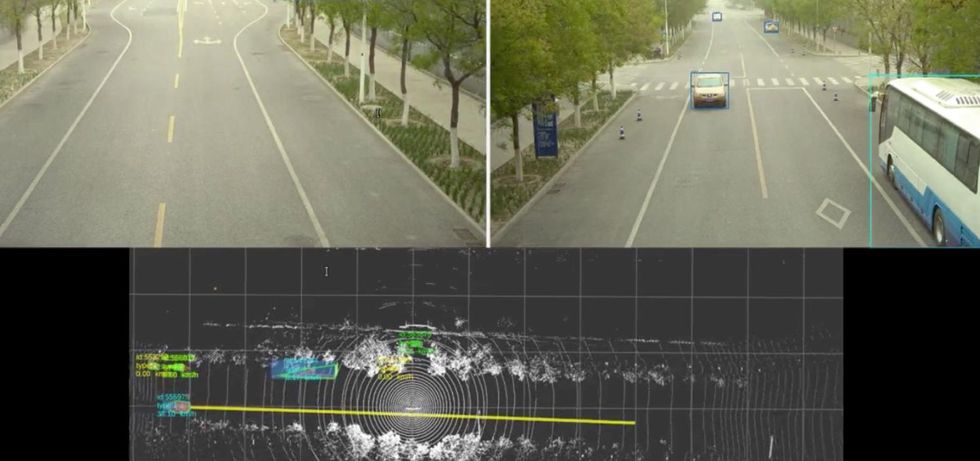

The self-driving vehicle, coordinating with the roadside system, sees right through a sandstorm swirling in Beijing to discern a static bus and a moving sedan [top]. The system even indicates its predicted trajectory for the detected sedan via a yellow line [bottom], effectively forming a semantic high-definition map. Credit: Shaoshan Liu

Properly expanded, this approach can prevent most accidents and traffic jams, problems that have plagued road transport since the introduction of the automobile. It can achieve the goals of a self-sufficient autonomous car without demanding more than any one car can provide. Even in a Beijing sandstorm, every person in every car will arrive at their destination safely and on time.

To date, we have deployed a model of this system in several cities in China as well as on our test track in Beijing. For instance, in Suzhou, a city of 11 million west of Shanghai, the deployment is on a public road with three lanes on each side, with phase one of the project covering 15 kilometers of highway. A roadside system is deployed every 150 meters on the road, and each roadside system consists of a compute unit equipped with an Intel CPU and an Nvidia 1080Ti GPU, a series of sensors (lidars, cameras, radars), and a communication component (a roadside unit, or RSU). Lidar is included because it provides more accurate perception than cameras do, especially at night. The RSUs then communicate directly with the deployed vehicles to facilitate the fusion of the roadside data and the vehicle-side data on the vehicle.

Sensors and relays along the roadside comprise one half of the cooperative autonomous driving system, with the hardware on the vehicles themselves making up the other half. In a typical deployment, our model employs 20 vehicles. Each vehicle bears a computing system, a suite of sensors, an engine control unit (ECU), and, to connect these components, a controller area network (CAN) bus. The road infrastructure, as described above, consists of similar but more advanced equipment. The roadside system's high-end Nvidia GPU communicates wirelessly via its RSU, whose counterpart on the vehicle is called the onboard unit (OBU). This back-and-forth communication facilitates the fusion of roadside data and vehicle data.

This deployment, at a campus in Beijing, consists of a lidar, two radars, two cameras, a roadside communication unit, and a roadside computer. It covers blind spots at corners and tracks moving obstacles, like pedestrians and vehicles, for the benefit of the autonomous shuttle that serves the campus. Credit: Shaoshan Liu

The infrastructure collects data on the local environment and shares it immediately with cars, thereby eliminating blind spots and otherwise extending perception in obvious ways. The infrastructure also processes data from its own sensors and from sensors on the cars to extract the meaning, producing what's called semantic data. Semantic data might, for instance, identify an object as a pedestrian and locate that pedestrian on a map. The results are then sent to the cloud, where more elaborate processing fuses that semantic data with data from other sources to generate global perception and planning information. The cloud then dispatches global traffic information, navigation plans, and control commands to the cars.

Each car at our test track begins in self-driving mode, that is, a level of autonomy that today's best systems can manage. Each car is equipped with six millimeter-wave radars for detecting and tracking objects, eight cameras for two-dimensional perception, one lidar for three-dimensional perception, and GPS and inertial guidance to locate the vehicle on a digital map. The 2D- and 3D-perception results, as well as the radar outputs, are fused to generate a comprehensive view of the road and its immediate surroundings.

Next, these perception results are fed into a module that keeps track of each detected object (say, a car, a bicycle, or a rolling tire), drawing a trajectory that can be fed to the next module, which predicts where the target object will go. Finally, such predictions are handed off to the planning and control modules, which steer the autonomous vehicle. The car creates a model of its environment up to 70 meters out. All of this computation occurs within the car itself.
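To make the tracking-to-prediction handoff concrete, here is a minimal sketch in Python. It assumes the simplest possible motion model, constant velocity estimated from the last two tracked positions; the article does not say which prediction model the cars actually use, and the names `TrackPoint` and `predict_constant_velocity` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TrackPoint:
    t: float  # timestamp in seconds
    x: float  # position in meters (map frame)
    y: float


def predict_constant_velocity(track, horizon_s, step_s=0.5):
    """Extrapolate a tracked object's path, assuming it keeps its latest velocity.

    This is an illustrative stand-in for the prediction module, not the
    authors' method.
    """
    if len(track) < 2:
        raise ValueError("need at least two observations to estimate velocity")
    prev, last = track[-2], track[-1]
    dt = last.t - prev.t
    if dt <= 0:
        raise ValueError("timestamps must be strictly increasing")
    vx = (last.x - prev.x) / dt
    vy = (last.y - prev.y) / dt
    steps = int(round(horizon_s / step_s))
    return [
        TrackPoint(last.t + k * step_s, last.x + vx * k * step_s, last.y + vy * k * step_s)
        for k in range(1, steps + 1)
    ]


# A sedan observed twice, one second apart, moving 10 m along the x axis.
track = [TrackPoint(0.0, 0.0, 0.0), TrackPoint(1.0, 10.0, 0.0)]
future = predict_constant_velocity(track, horizon_s=2.0, step_s=1.0)
```

The predicted points in `future` are what a planning module would consume; a production system would use a richer motion model and attach uncertainty to each point.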

Meanwhile, the intelligent infrastructure is doing the same job of detection and tracking with radars, as well as 2D modeling with cameras and 3D modeling with lidar, finally fusing that data into a model of its own, to complement what each car is doing. Because the infrastructure is spread out, it can model the world as far out as 250 meters. The tracking and prediction modules on the cars will then merge the wider and the narrower models into a comprehensive view.
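One way to picture the merge of the narrower and wider models is as de-duplication by distance. The sketch below assumes both models report objects as `(label, x, y)` tuples in a shared map frame and treats two same-label objects within a gating distance as one; the 2-meter gate and the function name `merge_views` are illustrative assumptions, not details from the deployed system.

```python
import math


def merge_views(vehicle_objs, infra_objs, gate_m=2.0):
    """Combine the car's short-range model with the roadside long-range model.

    Objects are (label, x, y) tuples in a shared map frame. An infrastructure
    object is treated as a duplicate if the car already sees an object with
    the same label within gate_m meters; otherwise it is added to the view.
    """
    merged = list(vehicle_objs)
    for label, x, y in infra_objs:
        duplicate = any(
            label == v_label and math.hypot(x - vx, y - vy) <= gate_m
            for v_label, vx, vy in vehicle_objs
        )
        if not duplicate:
            merged.append((label, x, y))
    return merged


# The car sees a sedan 30 m ahead; the roadside unit sees the same sedan
# plus a bus 180 m away, well beyond the car's own 70 m model.
vehicle_view = [("sedan", 30.0, 1.5)]
infra_view = [("sedan", 30.4, 1.2), ("bus", 180.0, -3.0)]
combined = merge_views(vehicle_view, infra_view)
```

Here the car keeps its own estimate of the sedan and gains the bus from the roadside model. A real fusion stage would also reconcile timestamps and weigh sensor confidence.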

The car's onboard unit communicates with its roadside counterpart to facilitate the fusion of data in the vehicle. The wireless standard, called Cellular-V2X (for "vehicle-to-X"), is not unlike that used in phones; communication can reach as far as 300 meters, and the latency (the time it takes for a message to get through) is about 25 milliseconds. This is the point at which many of the car's blind spots are now covered by the system on the infrastructure.

Two modes of communication are supported: LTE-V2X, a variant of the cellular standard reserved for vehicle-to-infrastructure exchanges, and the commercial mobile networks using the LTE standard and the 5G standard. LTE-V2X is dedicated to direct communications between the road and the cars over a range of 300 meters. Although the communication latency is just 25 ms, it is paired with a low bandwidth, currently about 100 kilobytes per second.

In contrast, the commercial 4G and 5G networks have unlimited range and a significantly higher bandwidth (100 megabytes per second for downlink and 50 MB/s for uplink on commercial LTE). However, they have much greater latency, and that poses a significant challenge for the moment-to-moment decision-making in autonomous driving.
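A back-of-the-envelope calculation shows what the LTE-V2X figures imply for message size. The sketch below assumes delivery time is simply latency plus payload size divided by bandwidth, and that a kilobyte is 1,000 bytes; both are simplifications, and the function name `delivery_time_s` is hypothetical.

```python
# Figures quoted in the article for LTE-V2X.
LTE_V2X_LATENCY_S = 0.025          # about 25 ms
LTE_V2X_BANDWIDTH_BPS = 100_000    # about 100 kilobytes per second (assumed 1 KB = 1,000 bytes)


def delivery_time_s(payload_bytes,
                    latency_s=LTE_V2X_LATENCY_S,
                    bandwidth_bytes_per_s=LTE_V2X_BANDWIDTH_BPS):
    """Estimate delivery time as fixed latency plus serialization time."""
    return latency_s + payload_bytes / bandwidth_bytes_per_s


small_message_s = delivery_time_s(1_000)    # a compact object list
large_message_s = delivery_time_s(50_000)   # a bulkier payload
```

Under these assumptions a 1-kilobyte message arrives in roughly 35 ms, while a 50-kilobyte payload takes about half a second, which suggests why compact semantic data is a better fit for this channel than raw sensor output.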

A roadside deployment at a public road in Suzhou is arranged along a green pole bearing a lidar, two cameras, a communication unit, and a computer. It greatly extends the range and coverage for the autonomous vehicles on the road. Credit: Shaoshan Liu

Note that when a vehicle travels at a speed of 50 kilometers (31 miles) per hour, the vehicle's stopping distance will be 35 meters when the road is dry and 41 meters when it is slick. Therefore, the 250-meter perception range that the infrastructure allows provides the vehicle with a large margin of safety. On our test track, the disengagement rate (the frequency with which the safety driver must override the automated driving system) is at least 90 percent lower when the infrastructure's intelligence is turned on, so that it can augment the autonomous car's onboard system.
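The size of that margin can be worked out directly from the numbers above. This short Python sketch uses only the article's figures (250-meter perception range, 35- and 41-meter stopping distances, 50 km/h); the function names are hypothetical.

```python
PERCEPTION_RANGE_M = 250.0
STOPPING_DISTANCE_M = {"dry": 35.0, "slick": 41.0}  # at 50 km/h, per the article


def kmh_to_ms(speed_kmh):
    """Convert kilometers per hour to meters per second."""
    return speed_kmh * 1000.0 / 3600.0


def safety_margin_m(condition):
    """Distance left over after the car has stopped from the edge of perception."""
    return PERCEPTION_RANGE_M - STOPPING_DISTANCE_M[condition]


def lookahead_time_s(speed_kmh):
    """Time needed to cover the full perception range at a constant speed."""
    return PERCEPTION_RANGE_M / kmh_to_ms(speed_kmh)


dry_margin = safety_margin_m("dry")
slick_margin = safety_margin_m("slick")
lookahead = lookahead_time_s(50.0)
```

At 50 km/h the car needs about 18 seconds to cover 250 meters, and even on a slick road more than 200 meters of perceived roadway remain beyond the stopping distance.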

Experiments on our test track have taught us two things. First, because traffic conditions change throughout the day, the infrastructure's computing units are fully in harness during rush hours but largely idle in off-peak hours. This is more a feature than a bug because it frees up much of the enormous roadside computing power for other tasks, such as optimizing the system. Second, we find that we can indeed optimize the system because our growing trove of local perception data can be used to fine-tune our deep-learning models to sharpen perception. By putting together idle compute power and the archive of sensory data, we have been able to improve performance without imposing any additional burdens on the cloud.

It's hard to get people to agree to construct a vast system whose promised benefits will come only after it has been completed. To solve this chicken-and-egg problem, we must proceed through three consecutive stages:

Stage 1: infrastructure-augmented autonomous driving, in which the vehicles fuse vehicle-side perception data with roadside perception data to improve the safety of autonomous driving. Vehicles will still be heavily loaded with self-driving equipment.

Stage 2: infrastructure-guided autonomous driving, in which the vehicles can offload all the perception tasks to the infrastructure to reduce per-vehicle deployment costs. For safety reasons, basic perception capabilities will remain on the autonomous vehicles in case communication with the infrastructure goes down or the infrastructure itself fails. Vehicles will need notably less sensing and processing hardware than in stage 1.

Stage 3: infrastructure-planned autonomous driving, in which the infrastructure is charged with both perception and planning, thus achieving maximum safety, traffic efficiency, and cost savings. In this stage, the vehicles are equipped with only very basic sensing and computing capabilities.

Technical challenges do exist. The first is network stability. At high vehicle speed, the process of fusing vehicle-side and infrastructure-side data is extremely sensitive to network jitter. Using commercial 4G and 5G networks, we have observed network jitter ranging from 3 to 100 ms, enough to effectively prevent the infrastructure from helping the car. Even more critical is security: We need to ensure that a hacker cannot attack the communication network or even the infrastructure itself to pass incorrect information to the cars, with potentially lethal consequences.
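To see why jitter of this size matters, consider how far a car moves while a roadside message is delayed. The sketch below assumes constant speed during the delay; the function name `position_error_m` is hypothetical, and the 50 km/h figure is borrowed from the stopping-distance example rather than from the jitter measurements.

```python
def position_error_m(speed_kmh, jitter_ms):
    """Distance a vehicle covers during an unexpected delay of jitter_ms.

    Assumes the vehicle holds a constant speed for the duration of the delay.
    """
    speed_ms = speed_kmh * 1000.0 / 3600.0
    return speed_ms * (jitter_ms / 1000.0)


# The observed jitter range on commercial 4G and 5G networks: 3 to 100 ms.
best_case_m = position_error_m(50.0, 3.0)
worst_case_m = position_error_m(50.0, 100.0)
```

At 50 km/h the misalignment grows from a few centimeters at 3 ms to well over a meter at 100 ms, and it scales linearly with speed, so roadside and vehicle data that disagree by that much are hard to fuse reliably.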

Another problem is how to gain widespread support for autonomous driving of any kind, let alone one based on smart roads. In China, 74 percent of people surveyed favor the rapid introduction of automated driving, whereas in other countries, public support is more hesitant. Only 33 percent of Germans and 31 percent of people in the United States support the rapid expansion of autonomous vehicles. Perhaps the well-established car culture in these two countries has made people more attached to driving their own cars.

Then there is the problem of jurisdictional conflicts. In the United States, for instance, authority over roads is distributed among the Federal Highway Administration, which operates interstate highways, and state and local governments, which have authority over other roads. It is not always clear which level of government is responsible for authorizing, managing, and paying for upgrading the current infrastructure to smart roads. In recent times, much of the transportation innovation that has taken place in the United States has occurred at the local level.

By contrast, China has mapped out a new set of measures to bolster the research and development of key technologies for intelligent road infrastructure. A policy document published by the Chinese Ministry of Transport aims for cooperative systems between vehicle and road infrastructure by 2025. The Chinese government intends to incorporate into new infrastructure such smart elements as sensing networks, communications systems, and cloud control systems. Cooperation among carmakers, high-tech companies, and telecommunications service providers has spawned autonomous driving startups in Beijing, Shanghai, and Changsha, a city of 8 million in Hunan province.

An infrastructure-vehicle cooperative driving approach promises to be safer, more efficient, and more economical than a strictly vehicle-only autonomous-driving approach. The technology is here, and it is being implemented in China. To do the same in the United States and elsewhere, policymakers and the public must embrace the approach and give up today's model of vehicle-only autonomous driving. In any case, we will soon see these two vastly different approaches to automated driving competing in the world transportation market.
